Autonomous systems

Professor David Lane chaired the event on Innovation in Autonomous Systems held at the Royal Academy of Engineering in June

From the automatons of science fiction to the reliable workhorses of industrial production and process tasks, the concept of machines that work on their own is not new.

Recently, however, there have been big shifts in how engineers approach autonomous systems, and those shifts are reflected in a subtle change in vocabulary. Automation, the application of advanced mechanisation and control technologies to specific tasks formerly undertaken by people – if undertaken at all – remains an important element of industry’s competitiveness. But increasingly, ‘autonomy’ is being used across a wider span of activities.

Autonomous systems link advances in sensors, control and processing to physical devices and machines. Rather than automating a single action or activity, these advances enable a range of responses that vary with context. So the machine adapts its behaviour without direct human intervention at the actual point of decision. Each decision is based on algorithms that set the boundaries for behaviour and also determine the onboard processing of inputs from internal and external sensors.

“Autonomy is about making dumb devices smart,” said Professor David Lane, founder and director of the Edinburgh Centre for Robotics, who chaired the Academy event. The definition of autonomy is very broad. It runs, in Professor Lane’s view, from the modern upmarket domestic washing machine, which has increasingly complex cycles that enable it to ‘decide’ on an appropriate wash, all the way through to unmanned aircraft and driverless vehicles.

These are the headline-making outliers of a trend whose possibilities are attracting global attention. Autonomy is becoming big business. The UK's Robotics and Autonomous Systems special interest group (RAS), a branch of Innovate UK that directs research policy, has identified a world market worth $70 billion by 2020: estimates for 2025 range between $1.9 and $6.4 trillion. The group has calculated that the UK, with strengths in software engineering, signal processing and systems integration, might reasonably hope to take up to 10% of that market. Politicians keen to see the economic benefit have backed the forecasts with commitments to research funding. In 2013, the government invested £35 million as part of a capital boost for the RAS. At the time, as its main public funders, Innovate UK and the Research Councils had an RAS portfolio of over £85 million.

Global technology companies such as Alphabet, Microsoft, Amazon and even Uber, known for its market-disruptive taxi venture, are engaged in what Professor Lane termed "a feeding frenzy" of recruitment in universities worldwide as they gear up their research activities for what they see as a logical extension to current markets. Their involvement in fundamental research is indicative of high expectations but also of considerable uncertainty.

The first flight demonstrating how an unmanned aircraft can operate in all UK airspace took place in April 2013. The adapted Jetstream airliner completed a 500-mile trip from Warton, Lancashire to Inverness, Scotland under the command of a ground-based pilot and control of NATS © BAE Systems

Augmented humans

Automobiles and aircraft are already heavily loaded with sensors, processing units and controls; technologies allied to the so-called Internet of Things allow these to be wirelessly interconnected and now, increasingly, to interact with other systems. These can include driverless vehicles and unmanned aircraft, but can equally refer to systems that direct drivers to available parking spaces or link into intelligent traffic management networks to deal with congestion on roads or in the air.

Automation is about replacing unreliable humans with more controlled and controllable machines. Autonomy includes that as an option, but may also describe a range of assistive technologies that work alongside a human operator to produce better decisions, optimised and adapted for the specific conditions at the time.

Some autonomous systems under development extend the robotic tradition of machines doing tasks that are dangerous or that challenge humans in terms of strength, stamina or accuracy. Systems can be designed to monitor environments where this was done irregularly, if ever. For example, bridges that were inspected for structural integrity maybe twice a year by teams of engineers can now use sensors to monitor their own health continuously, and to send reports to repair crews if their processors detect anything untoward happening.
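The self-monitoring idea can be illustrated with a minimal sketch. It assumes a stream of strain-gauge readings and a simple rule: flag any reading that strays far from the recent baseline. The function name, window size, threshold and sample data are all illustrative assumptions, not details of any real bridge-monitoring system.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class Alert:
    index: int       # position of the reading in the stream
    value: float     # the reading that triggered the alert
    baseline: float  # mean of the preceding window


def detect_anomalies(readings, window=5, threshold=3.0):
    """Flag readings that deviate from the recent baseline.

    A reading is flagged when it lies more than `threshold` standard
    deviations from the mean of the previous `window` readings.
    Both parameters are hypothetical defaults chosen for illustration.
    """
    alerts = []
    for i in range(window, len(readings)):
        recent = readings[i - window:i]
        mu = mean(recent)
        sigma = stdev(recent)
        if sigma == 0:
            # A perfectly flat window: any change at all is a deviation.
            deviates = readings[i] != mu
        else:
            deviates = abs(readings[i] - mu) > threshold * sigma
        if deviates:
            alerts.append(Alert(i, readings[i], mu))
    return alerts


# Made-up strain readings with one obvious spike.
strain = [100.1, 100.0, 99.9, 100.2, 100.0, 100.1, 112.5, 100.0]
alerts = detect_anomalies(strain)
for alert in alerts:
    print(f"Reading {alert.index}: {alert.value} (baseline {alert.baseline:.2f})")
```

In a deployed system the alert would presumably be sent to a repair crew rather than printed, and the detection rule would be far more sophisticated than a rolling threshold.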

On a larger scale, engineers are working on unmanned aerial and underwater vehicles, with both military and civilian applications for these technologies. Such autonomous systems build on a long tradition of remote sensing to provide measurement in places that are off-limits for humans, such as inside aero-engines or nuclear power stations.

Pros and cons

Autonomous systems are also going into less well-signposted territory, including sectors that have not previously been associated with robotics and automation, such as retail, health and social care, and assistive technologies where the autonomous system doesn’t do the task for the human, but assists the human to do the task by adding a bit of extra muscle or memory. There is concern that very large international corporations – although they may help speed technological development – may become too influential in the process of defining the market. Their involvement could also lead to fragmented standards, to an emphasis on systems that have large commercial benefit but which do not necessarily serve the wider public interest, and to the exclusion of ideas from smaller companies and research groups.

Against these fears, however, big industries are interested in the outcome of R&D on autonomous systems. The retail sector, for example, has a long history of automation within warehousing and distribution. It has also driven changes in purchasing habits brought about through the internet. Autonomy offers the prospect of joining together these “islands of automation”.

Like the internet, telecoms and other technologies that preceded them, autonomous systems raise broader questions about social acceptance. There are implications in terms of a broad range of current legislation, from health and safety regulations through to individual privacy concerns. There are implications too in terms of ease of use, personal comfort and trust and the whole area of how far human beings interact with machines.

Many of these issues come to the fore in discussions about the application of assistive systems in health and social care. Professor Tony Prescott, Director of the Sheffield Centre for Robotics, has been particularly concerned with the sector known as field robotics, where autonomous systems interact with the ‘natural’ world, including contact with human beings. A large area of potential applications for autonomous systems is in the kind of assistive technology that helps to mitigate some of the effects of the health problems of an ageing population. The number of people surviving into old age is growing at a time when the numbers of those entering work are static or even falling. Economic circumstances also mean that funds for care are unlikely to grow in line with demand.

Concealed within these simple inescapable facts is a related but deeper problem: those who are surviving longer do so with an increasing range of ailments and afflictions that require treatment or monitoring. Professor Prescott sees an opportunity here for a subtle redefinition of what we mean by ‘autonomous systems’ and ‘robots’. His definitions would include interactive devices, such as those that combine an element of surveillance through sensors with some form of assistance, or that enable older people to maintain independence for longer, perhaps through self-medication, or providing reminders for the forgetful.

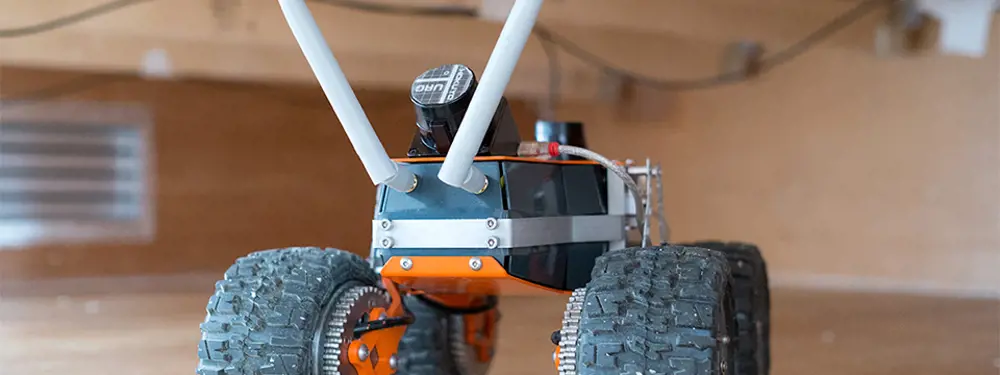

London company, q-bot, develops autonomous tools that enable installers and contractors to access hard-to-reach areas, to survey and understand these environments, building up maps and identifying services that can be provided. They can also apply treatments remotely, such as underfloor insulation © q-bot

Autonomous devices already in development at Sheffield include biomimetic physical aids and robotic exoskeletons for walking that are derived, in part at least, through sports science analysis of posture and gait. At Imperial College London, engineers and neuroscientists have been researching human motor control of joints, with the aim of creating autonomous systems to relieve muscle weakness or help with rehabilitation after a stroke. The intention is to design equipment that does not need supervision: self-administered treatment is often likely to be the norm in the resource-strapped future.

Not all frailties of age and infirmity are physical. Other kinds of autonomous systems might include adaptive furniture. The designer Sebastian Conran has, for example, designed an ‘Intelli Table’ surface that adapts its height, format and availability according to commands programmed through a mobile phone app. Other systems under development at Imperial are linked into a discreet domestic monitoring system, combining vision technology with local devices and a communications network. Such systems might provide reminders to the forgetful or alerts to the wider world of a fall or a failure to take medicines.

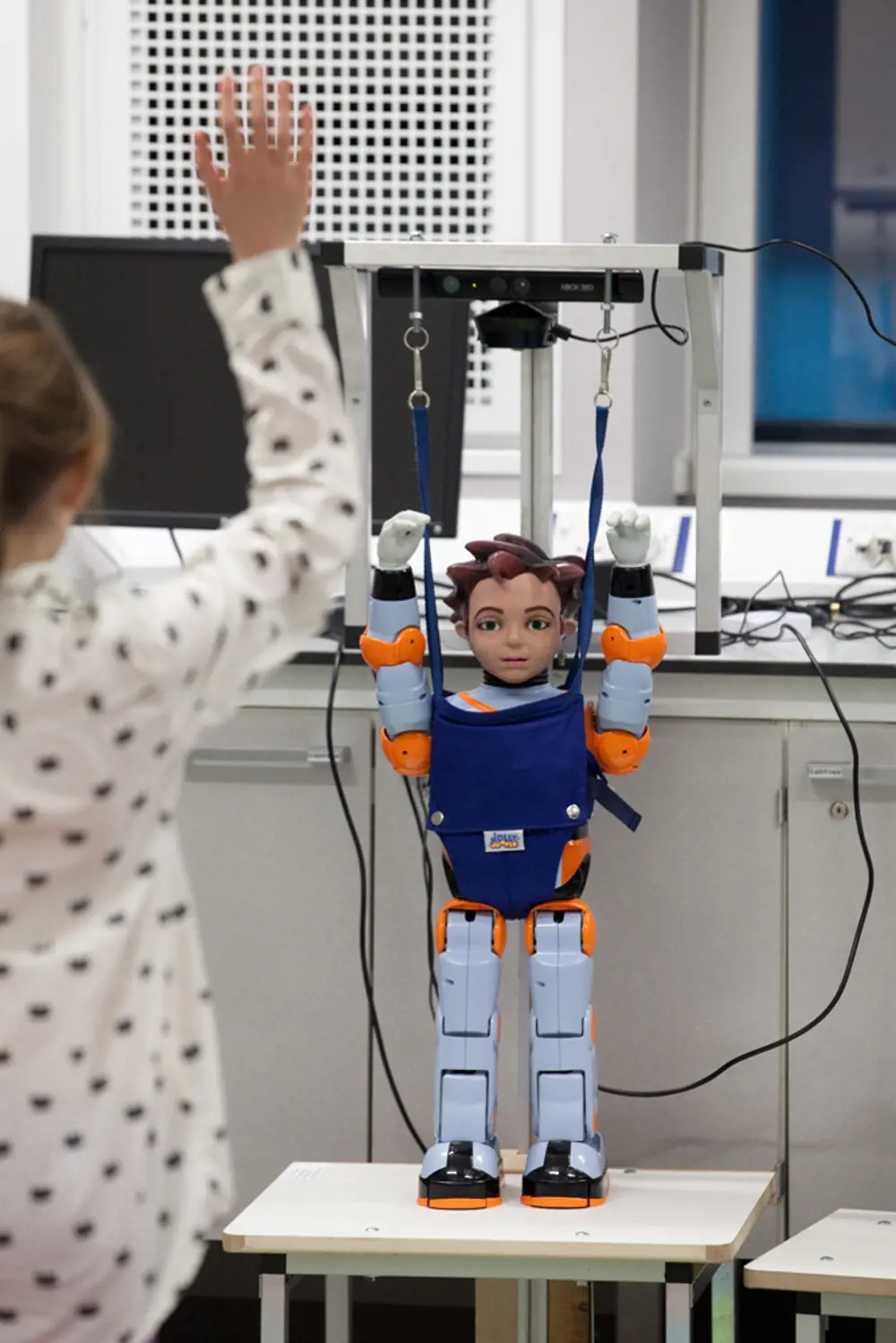

Some of these systems are what Professor Prescott terms a “social bridge”, providing not just services but also, perhaps, an element of companionship. But there remain big questions: it is now evident that domestic humanoid robots of the kinds found in science fiction are both too expensive and really not what people want. However, it is not yet clear what forms of assistive technology will be widely accepted; nor, even, how people will interact with services, for example, through a mobile phone, a television or something new.

Humanoid robots such as the American Zenorobot are being investigated at Sheffield Centre for Robotics in roles such as therapeutic robotics, where their ability to mimic human behaviours could find them roles in physiotherapy or as classroom assistants.

Taking decisions

Questions remain as to how the technology of autonomous systems might be introduced into mass markets, particularly for applications with wider public benefit. But next-generation systems will also have to tackle technical questions to be considered truly autonomous and capable of operating in the real world. Many complex human ‘decisions’ scarcely rate as decisions at all, but still have to be spelled

Professor Michael Beetz, Head of the Institute for Artificial Intelligence at the University of Bremen, believes that “the key challenge is knowledge”, not factual information but the ‘common sense’ that humans take for granted. According to Professor Beetz, “A simple instruction like ‘pour stuff from a pot’ depends on what is in the pot, and then the robot has to reason how to grab the pot, whether it’s got a lid or not, when to stop pouring. These things have to be programmed explicitly.”

The team at Bremen, with input from other robotics groups including Professor Lane’s Edinburgh centre, has been working on a universal open language for programming complex strings of instructions by ‘parameterising’ them into a database that describes real actions. However, this is only part of the work needed to make algorithms for autonomous systems that reflect real-world conditions.
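To make the idea of ‘parameterising’ an instruction concrete, here is a minimal sketch of how ‘pour stuff from a pot’ might expand into explicit steps once the contents, the lid and the amount are known. It is not the Bremen team’s language or database: the `Pot` class, the `POUR_KNOWLEDGE` table and every value in it are hypothetical, chosen only to show how much has to be spelled out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pot:
    contents: str         # what is in the pot, e.g. "soup"
    has_lid: bool
    fill_fraction: float  # 0.0 (empty) to 1.0 (full)


# Hypothetical 'common sense' table: how to handle each kind of contents.
POUR_KNOWLEDGE = {
    "water": {"grasp": "both handles", "tilt_deg": 60},
    "soup": {"grasp": "both handles", "tilt_deg": 40},
    "rice": {"grasp": "one handle", "tilt_deg": 75},
}


def plan_pour(pot, amount_fraction):
    """Expand a vague 'pour' instruction into explicit, ordered steps."""
    if pot.contents not in POUR_KNOWLEDGE:
        raise KeyError(f"no pouring knowledge for {pot.contents!r}")
    if not 0 < amount_fraction <= pot.fill_fraction:
        raise ValueError("cannot pour more than the pot holds")

    params = POUR_KNOWLEDGE[pot.contents]
    steps = []
    if pot.has_lid:
        steps.append("remove lid")
    steps.append(f"grasp pot by {params['grasp']}")
    steps.append(f"tilt to {params['tilt_deg']} degrees")
    steps.append(f"stop when {amount_fraction:.0%} of the pot has been poured")
    steps.append("return pot to upright")
    return steps


steps = plan_pour(Pot(contents="soup", has_lid=True, fill_fraction=0.8), 0.5)
for step in steps:
    print(step)
```

Even this toy version needs a lookup table, two validity checks and a conditional for the lid, which gives some sense of why Professor Beetz describes knowledge as the key challenge.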

The other side of the work is to teach the system when it is appropriate to apply the knowledge that it has. As humans, we do this by using what are called ‘episodic memories’, which apply context to actions and enable us to extract the appropriate response and action from those available to us. Autonomous systems gain this kind of experience through direct interaction with systems, walking through actions to learn what works when, and what doesn’t. Systems designers also make instruction manuals and web-based materials that are ‘readable’ by machines.

The long-term aim of the Bremen work is to create a single core knowledge bank in a universal language so that systems can share what they have learned with others. Just as important for enabling this to work in the real world, according to Professor Maria Fox of King’s College London, is timing.

Professor Fox’s group at King’s is working on building awareness of time constraints into autonomous systems, to teach them to act in a timely fashion, to anticipate the next action and to be aware of the consequences of their past actions on the range of actions that they might take. Algorithms that could achieve this are of a type known as “search-infer-relax”. The system searches for the current restrictions, infers what happens if available courses of action are pursued and considers the constraints each course of action will place on the system, and then moves onward, ‘relaxed’ in the knowledge that system integrity is being maintained.
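The three steps described above can be sketched in a toy scheduler. This is a loose reading of the article’s description, not the King’s group’s algorithm: the `Task` class, the deadline model and the preference rule are all assumptions made for illustration. The system prefers one task, but only commits to it if doing so still leaves time for everything else.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    name: str
    duration: int  # time units the task takes
    deadline: int  # time by which it must be finished


def feasible(tasks, now):
    """Infer: can every remaining task still meet its deadline?

    With one machine and all tasks available immediately, running them
    in earliest-deadline-first order is a sufficient check.
    """
    t = now
    for task in sorted(tasks, key=lambda x: x.deadline):
        t += task.duration
        if t > task.deadline:
            return False
    return True


def choose_next(tasks, now, preference):
    """Return the most preferred task that keeps the rest achievable."""
    # Search: list the actions currently open, most preferred first.
    for task in sorted(tasks, key=preference):
        finish = now + task.duration
        remaining = [t for t in tasks if t is not task]
        # Infer: what does taking this action do to later options?
        if finish <= task.deadline and feasible(remaining, finish):
            return task
    return None


def run(tasks, preference):
    """Execute all tasks, never committing to a choice that breaks a deadline."""
    now, order = 0, []
    tasks = list(tasks)
    while tasks:
        task = choose_next(tasks, now, preference)
        if task is None:
            raise RuntimeError("no action keeps all deadlines")
        # Relax: the choice is known to be safe, so commit and move on.
        tasks.remove(task)
        now += task.duration
        order.append(task.name)
    return order


# Hypothetical jobs for a vehicle that would rather do long jobs first.
jobs = [Task("inspect", 3, 10), Task("recharge", 2, 2), Task("report", 1, 6)]
order = run(jobs, preference=lambda t: -t.duration)
print(order)
```

Here the system would like to start with the long inspection, but inferring the consequences shows that the recharge would then miss its deadline, so it recharges first and returns to its preferred order afterwards.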

Underlying these algorithm research projects is the fundamental difference between future autonomous systems and the robots and automation currently in existence. Today’s robots have to be programmed incrementally to carry out ever more complicated tasks – to be optimised.

We have become accustomed to automation systems that work precisely and for long periods of time; but the future demand will be for systems that can adapt their behaviour and do the best possible job in a wide variety of circumstances that cannot always be predicted beforehand. And that is new.

***

This article has been adapted from "Autonomous systems", which originally appeared in the print edition of Ingenia 64 (September 2015).

Contributors

John Pullin

Author
