A sense of touch for extra robotic arms

An extra arm could help us out in scenarios from the mundane to the exceptional, whether it’s hanging a picture on the wall or allowing a surgeon to apply pressure while operating with both hands. In rescue operations, firefighters could move debris while extracting injured people from underneath.

But it’s early days for the body augmentation field. One of the biggest hurdles is controlling an extra body part when it’s not already programmed into your brain.

So far, some of the best results have come from repurposing movements from another body part. In 2024, researchers at the Royal Society’s Summer Science exhibition showcased a third thumb controlled by wiggling the toes. Of the 600-odd members of the public who tried it out, 98% could learn how to use it in under a minute.

But there’s still a missing piece: feedback. If a person swings their arm and hits a wall, they’ll feel it. But if they’re wearing an extra arm and it collides with something, they won’t feel that force. We also know where our bodies are in space through proprioception, which is often thought of as a sixth sense and is crucial for coordinating movement.

Both touch and proprioception provide critical two-way feedback for when we learn new movements. They’ll be vital for us to naturally, deftly operate extra limbs (and digits). Dr Alessia Noccaro, based at the University of Newcastle, is developing a way to provide that feedback through a platform that will allow users to control and feel the robot at the same time.

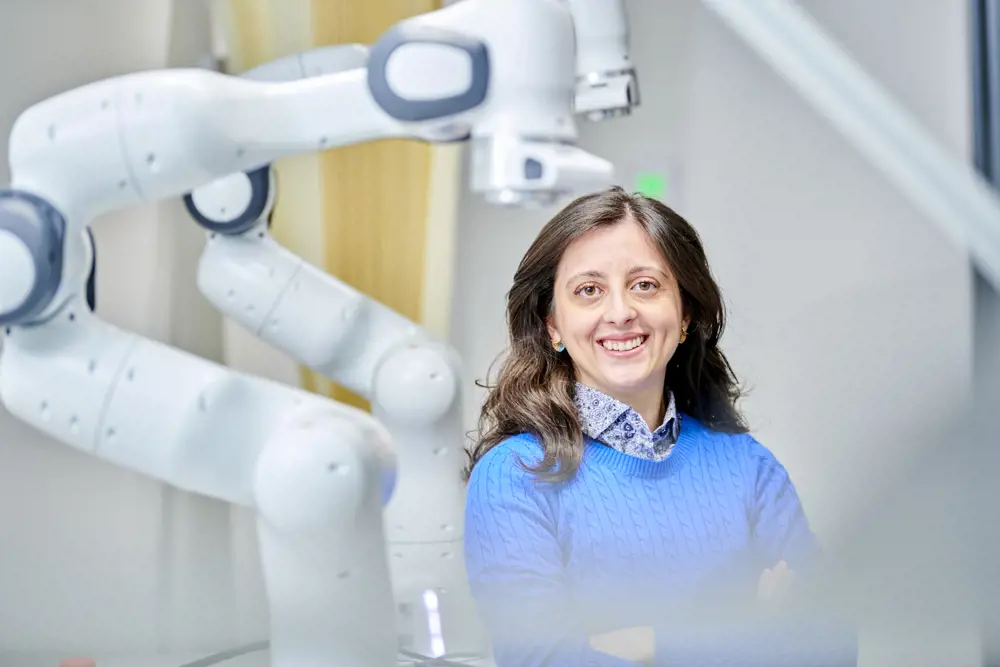

Alessia Noccaro, a Royal Academy of Engineering UK Intelligence Community postdoctoral researcher at the University of Newcastle, has a small collection of commercial robotic arms in her lab.

Reconstructing our senses of touch and space

Noccaro, a Royal Academy of Engineering UK Intelligence Community postdoctoral researcher, has a small collection of commercial robotic arms in her lab, including a lightweight arm that can be worn like a backpack. As with the third thumb, wearers can move the arm by wiggling their foot. The setup she’s developing also has two ways to provide feedback on the robot’s position in space.

One does this through small currents produced by an electrical mesh worn on the thigh, a device provided by European R&D company Tecnalia. “The sensation is between tickling and touch, so it’s not painful,” she says. “Wherever the robot moves, even if you’re not looking at the robot, you can feel the robot on your leg.”

This means that even if you were wearing it with your eyes closed, you would know more or less where the arm is: for example, whether it’s travelling right or left.

Another feedback device, worn across the thigh and calf, has six small motors that vibrate differently according to the forces felt by the robot. If the robotic arm pushes against the wall, you can feel it, as data from the sensor is transmitted to your leg. And it’s not just collisions – you can even feel air resistance, if the robot moves rapidly into free space. Heavy and lightweight robots produce different sensations, too.

Another route to understanding the brain

So far, she’s been studying simple movements, such as reaching for, picking up and moving objects. Much of her foundational work was in virtual reality, which is easier to study in the lab without the complicating factors of “mass and inertia that can also hit you”, explains Noccaro. Having moved on to hybrid experiments, she’s gearing up to publish work involving entirely real robots. The next step will be more complex (but still everyday) tasks: hanging a picture on the wall or mixing dough while adding flour.

One of the things that excites her most about her work, however, is the prospect of untangling how the brain learns. Noccaro’s experiments have shown that people can learn how to control the robot in under five minutes. “We know the brain is highly plastic – it can change a lot,” she says. “We know it can control a third arm.”

She likens the way the brain adapts to another limb to learning to play piano: the motor cortex, which is responsible for movement, changes and its representation of the fingers grows. “I imagine something similar would happen with an additional limb, but we don’t know yet.”

Her work could feed into this fundamental neuroscience research, too. “We have the technology, but we don’t have the means yet, because we don’t know [how] people could use it proficiently,” she says. “Understanding how the brain controls robotic limbs is the most fascinating and empowering part of the research.”

Contributors

Florence Downs

Author
