Robotic assistance in surgery operating theatres

Surgery is a three-dimensional craft skill, but one in which practitioners are sometimes hampered by an incomplete sense of what confronts them. Human tissue varies from translucent to opaque, so surgeons’ only guide to what their scalpel tips are approaching as they cut into a tissue is their anatomical knowledge of what should lie below its surface and exactly where. The process would be easier if a tissue could be rendered partially transparent, allowing the surgeon to visualise particular vessels, bones, nerves, or whatever lying beneath the surface is being cut and helping to either find or avoid them.

Although such magic is not available, it can be simulated through augmented reality. This technology is one of many being explored in attempts to boost the effectiveness and safety of surgery, whether performed by surgeons using their own hands or working through the intermediary of a robot. Imperial College London is one of the leaders in work of this kind. Its Hamlyn Centre for Robotic Surgery was established to develop innovative technologies that can “reshape the future of healthcare”; it emphasises the importance of translating ideas into patient benefits.

Ferdinando Rodriguez y Baena is Professor of Medical Robotics at Imperial College London and co-director of the Hamlyn Centre. He points out that academic bodies such as his can think broadly about tackling society’s health problems without having to focus too firmly on the financial consequences of failing to come up with something marketable. They can partially de-risk the early phase of innovation.

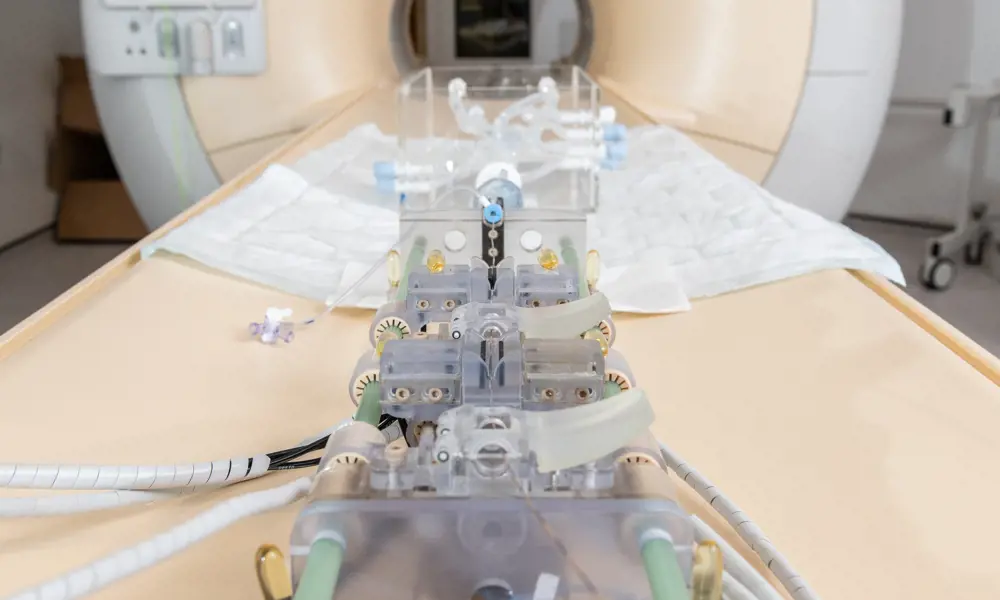

Original imaging showing the location of injuries (top); example HoloLens rendering of patient’s leg (middle); augmented reality overlays being used in clinical practice (bottom) © Images used under Creative Commons licence1

Augmented reality for surgical reconstruction

The exploitation of augmented reality by engineers at Imperial College London was originally carried out in partnership with one of its associated hospitals, St Mary’s in Paddington. As a major trauma centre, St Mary’s is accustomed to repairing limbs damaged in road traffic and other accidents. In addition to multiple fractures, these may involve soft tissue damage requiring surgical reconstruction using pieces of skin and underlying tissue, complete with their blood vessels, taken from adjacent regions of the body. For this transplanted tissue to survive and grow, its blood supply must be restored by connecting it to vessels in the region of its new home. Various methods can be used to locate these vessels, notably Doppler ultrasound machines that detect the presence of flowing blood.

Dr Philip Pratt is a senior scientist at the Helix Centre, the university’s Digital Health Innovation Lab, and formerly an associate of the Hamlyn Centre. He believes that augmented reality can make an effective contribution to some forms of complex surgical reconstruction. To test this, working with patients who had suffered severe leg injuries, his medical colleagues carried out a computed tomography (CT) scan of the entire length of each patient’s injured limb. The resulting data allowed radiologist Dr Dimitri Amiras to identify and map the various structures within it – bone, blood vessels and so on – and form a 3D image of the leg in which only structures of interest to the surgeon show up.

To exploit this image in the operating theatre, Dr Pratt devised software that would allow it to be fed into a Microsoft HoloLens headset. These headsets allow the user to see the real world, overlaid with computer-generated 3D imagery – in this case, models generated from the CT scan of the patient’s leg. Once the surgeon has brought the two sets of images into alignment, they can visualise the limb as if the tissues surrounding and masking the main blood vessels have become transparent. The vessels can be more easily located.

Following a pilot study of five cases, the surgical team that had used the system described the new approach as more reliable and considerably quicker than using conventional Doppler ultrasound. However, Dr Pratt believes there is still room for improvement in the system. For example, alignment of the real-world and the computer-generated images of the limb has so far been done manually by the user. In principle it should be possible for the system itself to do this, allowing the surgeon to don the headset and begin operating immediately. In the study it took about an hour from the time the patient was scanned to generate the enhanced image. Dr Pratt foresees this eventually being reduced to a couple of minutes.
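To give a flavour of what automatic alignment could involve, here is a minimal sketch of one standard approach: landmark-based rigid registration. It is not the method used in the Imperial study, which the article does not describe. It assumes that a few matching landmarks have been identified both on the CT-derived model and in the headset’s view of the limb, and it works in two dimensions for brevity, whereas a real system would work in three. The function names `fit_rigid_2d` and `apply_rigid_2d` are hypothetical.

```python
import math


def fit_rigid_2d(model_pts, world_pts):
    """Least-squares rotation and translation mapping model_pts onto world_pts.

    Both arguments are equal-length lists of (x, y) pairs, where the i-th
    model point corresponds to the i-th world point (assumed known).
    """
    n = len(model_pts)
    if n < 2 or n != len(world_pts):
        raise ValueError("need at least two paired landmarks")

    # Centroid of each landmark set.
    mcx = sum(p[0] for p in model_pts) / n
    mcy = sum(p[1] for p in model_pts) / n
    wcx = sum(p[0] for p in world_pts) / n
    wcy = sum(p[1] for p in world_pts) / n

    # Sum dot and cross products of the centred pairs; the best-fit
    # rotation angle is atan2(cross, dot).
    dot = 0.0
    cross = 0.0
    for (mx, my), (wx, wy) in zip(model_pts, world_pts):
        ax, ay = mx - mcx, my - mcy
        bx, by = wx - wcx, wy - wcy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)

    # Translation that carries the rotated model centroid onto the world centroid.
    c, s = math.cos(theta), math.sin(theta)
    tx = wcx - (c * mcx - s * mcy)
    ty = wcy - (s * mcx + c * mcy)
    return theta, (tx, ty)


def apply_rigid_2d(theta, t, pt):
    """Rotate pt by theta about the origin, then translate by t."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * pt[0] - s * pt[1] + t[0], s * pt[0] + c * pt[1] + t[1])


# Illustrative landmarks only: the "world" set is the model set
# rotated by 90 degrees and shifted by (2, 3).
model = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
world = [(2.0, 3.0), (2.0, 4.0), (1.0, 3.0)]
theta, shift = fit_rigid_2d(model, world)
```

Once the rotation and shift have been recovered, every point of the overlay can be passed through `apply_rigid_2d` so that it sits on the corresponding part of the real limb. The hard part in practice, which this sketch sidesteps, is finding reliable matching landmarks automatically.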

Dr Pratt is now a co-founder of a startup company, Medical iSight, which was created to develop augmented reality for this and other surgical procedures. One such is mechanical thrombectomy, a technique in which a microcatheter bearing a small grab is guided through an artery in the groin and up into the brain to remove a blood clot in patients who have suffered some forms of stroke. Doctors already do this using real-time X-ray guidance provided by a pair of screens showing the position of the catheter tip in the body, but the task of navigation is still challenging. By presenting the operator with a 3D image of the grab on its intravascular journey, a HoloLens headset would make it much easier.

Dr Pratt is certain that high-tech guided interventions will eventually become a routine feature in many kinds of existing and yet-to-be-invented medical and surgical procedures.

Biological inspiration for a device used to hold organs in place

Another group of engineers, scientists and doctors with an interest in applying technology to medicine is based at the Wellcome/EPSRC Centre for Interventional and Surgical Sciences (WEISS) in University College London. The Surgical Robot Vision group, led by Professor Danail Stoyanov, is developing a device to be used in conjunction with existing surgical robots such as the da Vinci® machine (see ‘Robots in theatre’, Ingenia 58). The task they set themselves – to develop some means of holding an organ firmly and preventing it from moving while it undergoes an ultrasound scan during surgery – sounds simple, but it is not.

The group’s interest was prompted by robot-assisted partial nephrectomy, a procedure intended typically to remove cancerous tissue from a diseased kidney. The extent of the cancerous tissue is identified using an ultrasound probe introduced into the patient’s abdominal cavity via one of the trocars, through which the instruments the robot requires for the operation itself are also inserted. Inside the body, and in the grasp of robotic forceps, the probe is swiped across the surface of the kidney to generate the required ultrasound image. Although the forceps tool, which is a kind of miniature wrist with comparable range and freedom of movement, is effective at holding and manipulating the probe, the task is still challenging. In practice, probes often slip off the target and must be repositioned, which is distracting, time consuming, and one more cognitive task for the surgeon operating the robot.

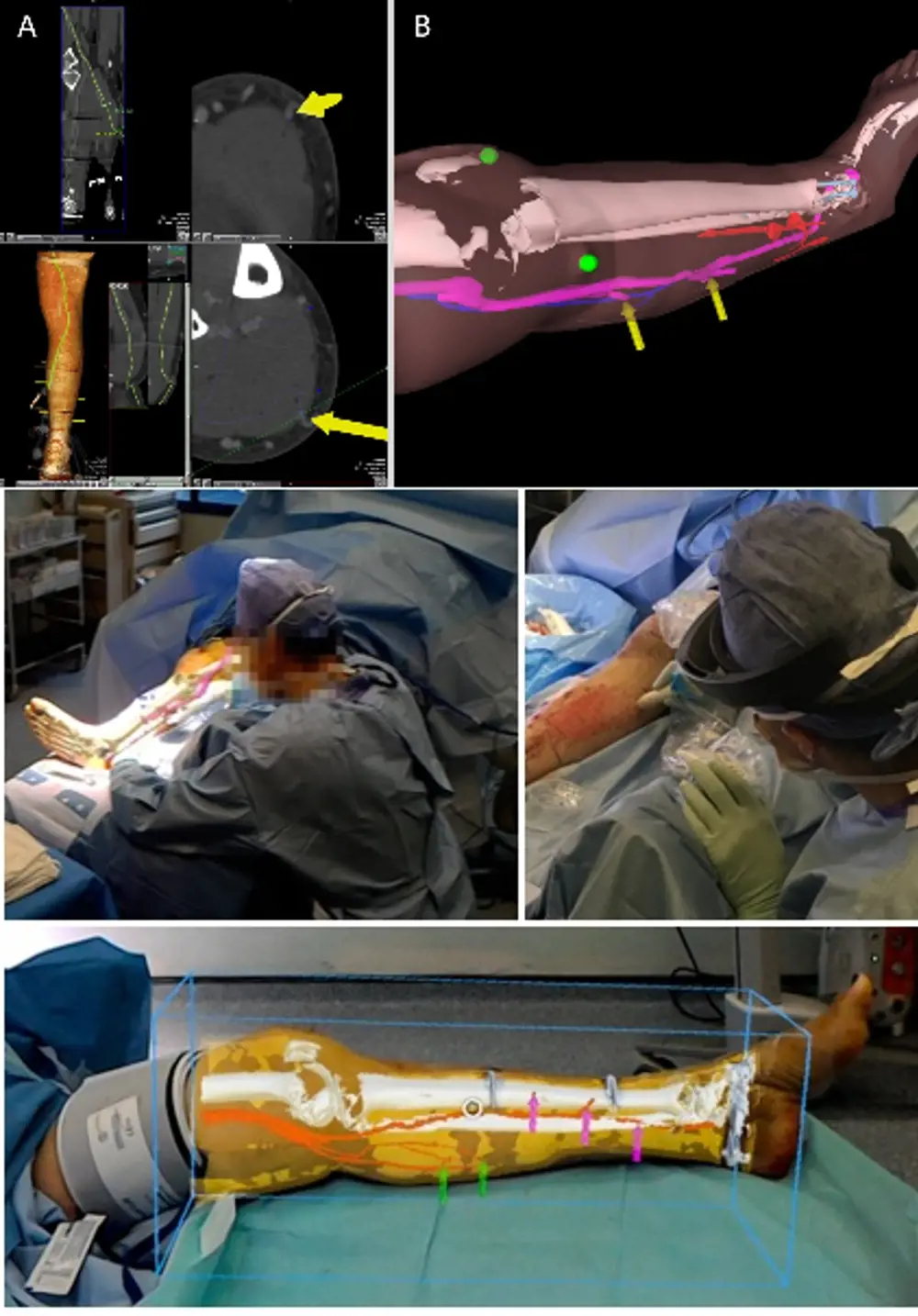

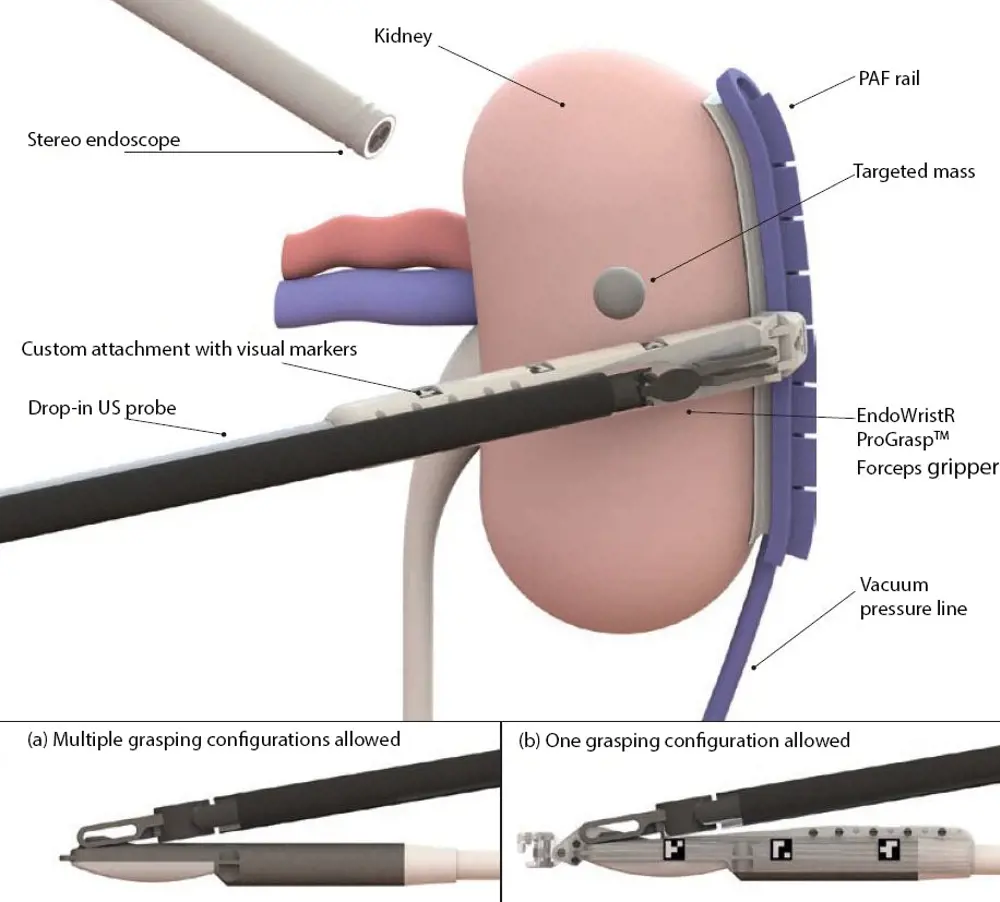

An overview of how the PAF rail system operates when used to guide a probe for robot-assisted partial nephrectomy procedures. The rail keeps the organ still, allowing the probe to outline the tumour margin accurately.

The group’s solution, inspired by biology, is what they call a pneumatically attachable flexible (PAF) rail, which comprises a thin flexible tube attached to a vacuum source. The final ten or so centimetres of one side of the tube are fitted with a row of suckers that open out of it, while the other side carries a flat platform. Once the PAF rail has been introduced into the body cavity and manoeuvred into position on the surface of the kidney, it is held in place when suction is applied through the tube, and so to the suckers.

The device is called a PAF rail because the perimeter of the platform is bounded by a T-shaped ridge. This acts as a rail on which the ultrasound probe can be located without slipping off, and along which its swiping movements can be directed. Moreover, the grip of the PAF rail on the kidney is sufficiently firm to use it for moving and repositioning the organ so that otherwise hidden parts of it can be brought into view.

The device is made of a rubber-like material, which has to be sufficiently flexible to bend to the curve of the kidney it is placed on, but also stiff enough so that the ultrasound probe can be coupled to the organ. To resolve this conflict, different components of the PAF rail are fabricated from variants of the material with appropriately differing degrees of hardness.

A patent has been applied for and the device has so far only been tested on animal organs. The length of the PAF rail can be chosen according to requirements, and project lead Dr Agostino Stilli can envisage it also being used for procedures carried out on other organs.

Intriguingly, the PAF rail’s capacity to adhere temporarily but firmly to an organ while causing no apparent harm is of interest to surgeons operating non-robotically. Moving or repositioning an organ by, for example, grasping it with forceps is more likely to cause injury than using a suction device. This has prompted the research group to mount a further investigation of the PAF rails simply for this very basic purpose.

Robotic devices for endovascular interventions in heart disease

Another group based at the Hamlyn Centre2 has been taking a critical look at robotic devices developed, or still in development, for endovascular interventions in heart disease. Using minimally invasive image-guided techniques such as coronary angiography, these interventions now play a key role in investigating and treating heart disease. The advent of robotics in this field is a more recent development.

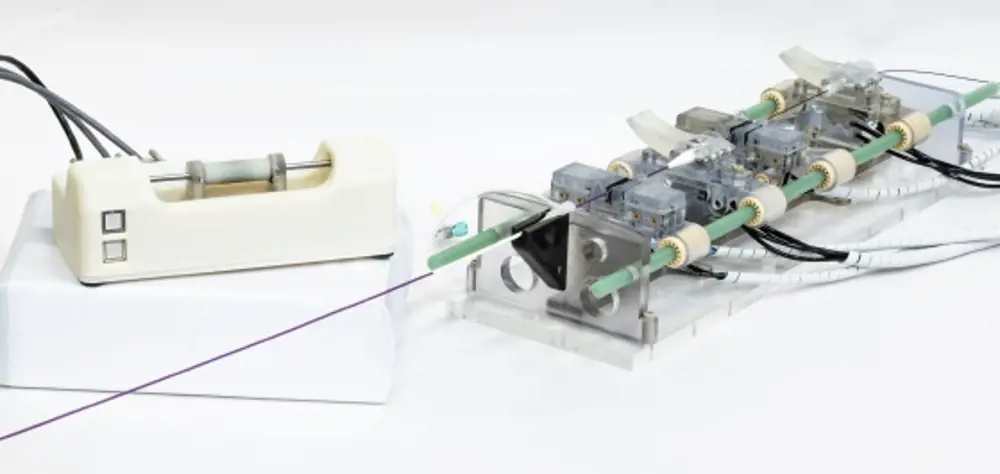

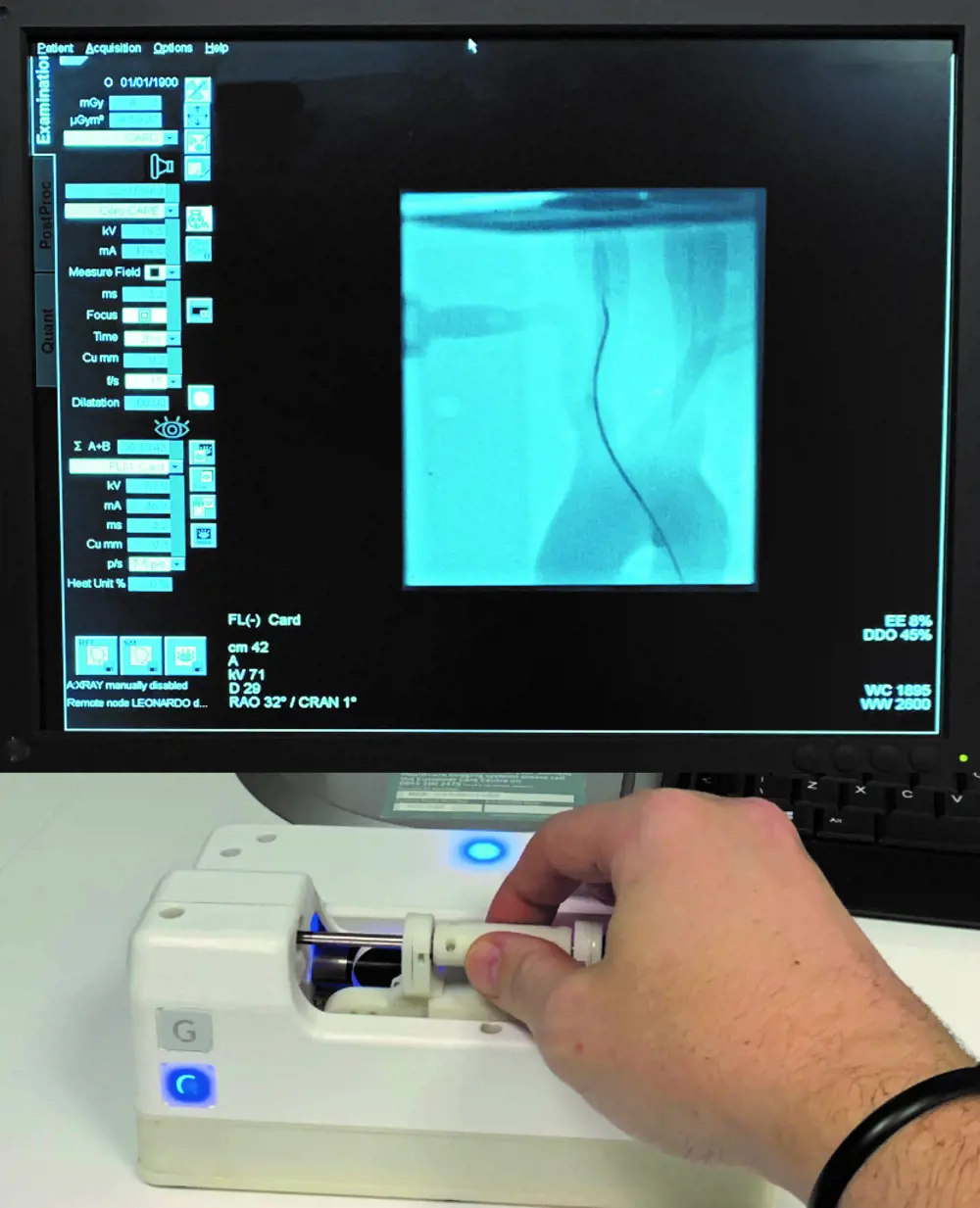

To steer their guidewires and catheters from the point of insertion in the patient’s arm or the groin, through the vascular system and into the heart, clinicians use fluoroscopic imaging, which uses X-rays to obtain real-time moving images. Staff are inevitably exposed to X-rays, despite shielding. The use of a system that has a control robot and a follower robot avoids this. Once the guidewire and catheter are inserted into the patient, staff can move to the control room where the first robot is located while the second stays in the treatment room.

The control robot (left) and follower robot (right). The latter stays in the treatment room with the patient, while healthcare professionals use the first robot in the control room to guide its trajectory © 2019 IEEE. Reprinted, with permission, from IEEE Proceedings2

When working without a robot, doctors move their guidewires using a combination of back and forth and rotational movements, monitoring the progress of the wire’s tip by watching the image on a screen. In reviewing the various designs of existing control robots, the Hamlyn team noted that the human-machine interface most often adopted for steering the guidewire is a joystick: a method of control quite unlike that of long-established manual procedures. Surgeons trained to perform these surgical interventions would have to acquire a different skill to carry them out with a robot.

When the team set out to build a robot that avoided some of what they saw as the inadequacies of those already developed, this consideration played a key role in the design. The robot’s control handle has a short round cylinder that can be slid back and forth on a horizontal rod. To instruct the pneumatically powered robot in the treatment room to advance the guidewire and catheter, the handle on the control is moved forward; to rotate the wire, the handle is rotated as well. The finger and thumb grip, and the movements required to control the guidewire, are much the same as those used when performing the procedure non-robotically. Haptic feedback, which creates an experience of touch and is absent from most other systems that rely simply on what the operator can see on the screen, ensures that excessive and potentially damaging force is never applied to the walls of the blood vessel through the wires.
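The control scheme described above can be sketched in a few lines of code. This is an illustration only, not the Hamlyn team’s software: the one-to-one mapping, the rule that blocks forward motion once a force limit is reached, and every name in it (`WireCommand`, `handle_to_command`, `force_limit_n`, `haptic_gain`) are assumptions made for the example, and the numbers used are arbitrary.

```python
from dataclasses import dataclass


@dataclass
class WireCommand:
    advance_mm: float  # positive pushes the guidewire forward, negative retracts it
    rotate_deg: float  # rotation about the wire's long axis
    haptic_n: float    # resisting force rendered back at the operator's handle


def handle_to_command(slide_mm, twist_deg, tip_force_n, force_limit_n, haptic_gain=1.0):
    """Turn one handle movement into a command for the follower robot.

    slide_mm and twist_deg are how far the operator slid and twisted the
    handle; tip_force_n is the force currently sensed on the wire.
    All values here are hypothetical.
    """
    if force_limit_n <= 0:
        raise ValueError("force_limit_n must be positive")

    advance = slide_mm
    # At or above the force limit, refuse to push any further forward,
    # but still let the operator pull the wire back.
    if tip_force_n >= force_limit_n and advance > 0:
        advance = 0.0

    # Feed the sensed force back to the handle so the operator can feel it.
    return WireCommand(advance, twist_deg, haptic_gain * tip_force_n)


# Illustrative values only.
normal = handle_to_command(2.0, 15.0, tip_force_n=0.1, force_limit_n=0.5)
blocked = handle_to_command(2.0, 0.0, tip_force_n=0.6, force_limit_n=0.5)
retract = handle_to_command(-2.0, 0.0, tip_force_n=0.6, force_limit_n=0.5)
```

The point of mirroring the handle’s slide and twist directly, rather than translating joystick deflection into speed, is that the operator’s existing manual skill carries over unchanged.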

Other features of the Hamlyn team’s system include the treatment room robot’s materials being compatible with magnetic resonance imaging; that the system can be used with any of the catheters and guidewires generally available; and that switching from robotic to manual control is quick and simple. The team reports good feedback from vascular surgeons who have tested the system using a full-scale model of heart blood vessels and in animal trials, but human trials are still some way off.

The robot’s control handle can be moved forwards and backwards and rotated to guide the treatment room robot’s wires through blood vessels © Hamlyn Centre robot-assisted endovascular intervention technologies research group

The future of robotic assistance in theatre

According to Professor Stoyanov, in the next 10 or 20 years, robots, robotic assistance and associated novel gadgetry, along with new imaging technologies, are going to become ubiquitous in operating theatres. Professor Rodriguez y Baena says that development in robotic surgery peaked in the 2000s and tailed off towards the end of the decade.

Now, interest in novel devices that can improve the safety and efficacy of robotic operating systems, or make them more versatile, is once again on the increase. Although current practice in robotic surgery is still dominated by operations on the prostate gland and the kidney, surgeons are already exploring its benefits in procedures ranging from the treatment of rectal cancer to the repair of heart valves. The greater the need for precise cutting and sewing, and the more inaccessible the tissues requiring attention, the greater the benefits of a successful robotic intervention. With the ever-accelerating rate of technological advance in software, especially in machine learning, the progress of robotic and other high-tech surgical assistance may prove unstoppable.

***

This article has been adapted from "Robotic assistance", which originally appeared in the print edition of Ingenia 83 (June 2020).

Contributors

Geoff Watts

Author

Dr Philip Pratt is a Senior Research Fellow at Imperial College London’s Helix Centre and Co-Founder of Medical iSight. He is at the forefront of research into image-guided surgery, and has translated new technology into clinical practice.

Professor Ferdinando Rodriguez y Baena is Professor of Medical Robotics at Imperial College London and Co-Director of the Hamlyn Centre for Robotic Surgery.

Dr Agostino Stilli is an Assistant Professor in Medical and Soft Robotics at University College London, Co-Investigator in the Surgical Robot Vision group and a Rosetrees Enterprise Fellow. He also founded the business CYCL which specialises in cycling accessories to improve cycling safety.

Professor Danail Stoyanov is a Professor in Computer Science at University College London specialising in robot vision for surgical applications. He is also a Chief Scientist at Touch Surgery and a RAEng/EPSRC Research Fellow at University College London.

Dr Giulio Dagnino is an Assistant Professor of Medical Robotics for the University of Twente. At the time of writing, he was a Research Associate at the Hamlyn Centre for Robotic Surgery, Imperial College London, working on robot-assisted endovascular intervention technologies. He has spent more than twelve years working on image-guided robotic surgery.
