The technology behind ‘The Tempest’

‘On a ship at sea: a tempestuous noise of thunder and lightning heard.’ From this opening stage direction of William Shakespeare’s The Tempest, it is clear that the audience is in for a night of electrifying special effects to match the actors’ performances. Packed with dramatic storms, a shipwreck and powerful magic, it is perhaps one of the trickiest of the playwright’s works to translate from the page to the stage. Written just six years before his death, it has more stage directions than any of Shakespeare’s other plays.

Even when performed during Shakespeare’s life, the play was a feast of special effects and backstage technology: wheels of canvas spun at high speed and fireworks were used to recreate the sounds of a raging storm; trapdoors made characters disappear; false tabletops made props vanish; and cast members were carried aloft by elaborate pulley systems. While the techniques available to directors have advanced considerably since then, recreating Shakespeare’s vision in a live theatre performance has always remained a challenge. Now, four centuries after his death, modern technology may finally be giving theatre-goers a glimpse of the play as Shakespeare originally imagined it.

In its latest production of The Tempest, the Royal Shakespeare Company (RSC), in collaboration with Intel and Imaginarium Studios, has used motion capture – a technique that uses cameras and computers to track an actor’s movements – live on stage to create a three-dimensional (3D) digital character in front of audiences.

This latest production has just finished a successful run at the RSC’s home in Stratford and is due to be shown at the Barbican in London this summer. In the adaptation, the character of Ariel is performed live on stage while motion-capture sensors embedded in the actor’s suit turn his movement in real time into a 3D animation that prances, dances and flies above the stage. It allows Ariel to transform into a sea nymph or a terrifying harpy at the command of the wizard Prospero, with the digital character integrated throughout the whole performance.

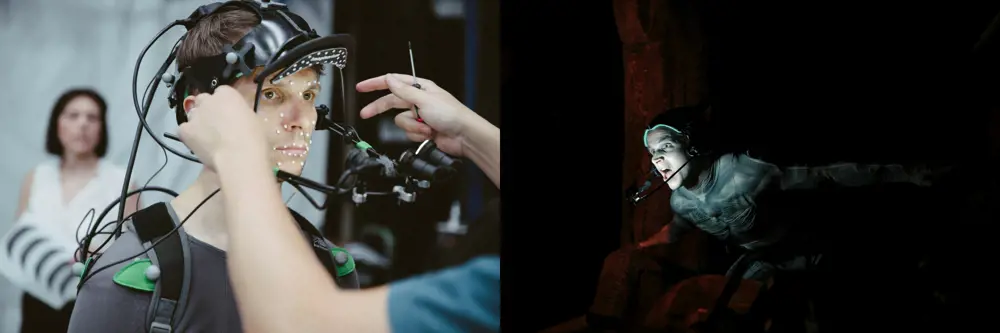

Motion capture technology involves actors wearing suits covered in reflective markers that pick up their movements © Intel

Capturing the action

Motion capture itself is not all that new. It has been 16 years since Andy Serkis first appeared on cinema screens as the snarling and snivelling animated character Gollum in The Lord of the Rings trilogy. His groundbreaking performance in the films brought motion capture to the attention of the world. Serkis wore a special suit covered in reflective markers so that the filmmakers could track his movements as he acted out the part of Gollum opposite other cast members. These movements were then mapped onto the computer-generated Gollum that appeared in the final film.

However, motion capture was not invented or first used by the film industry. It was initially developed in the 1970s as a tool to help analyse how a person walks during rehabilitation sessions following an injury. Healthcare professionals could use cameras to track markers placed on a patient’s limbs to model their gait precisely and look for defects. In the mid-1990s, the video games industry saw the potential of motion capture to help it create more lifelike characters in computer-generated worlds. Since Serkis’s acclaimed performance in The Lord of the Rings, however, the technology’s use in the film industry has exploded. Serkis himself has since played many more computer-generated characters with the technology, in films including King Kong, Rise of the Planet of the Apes and Star Wars: The Force Awakens.

Traditionally, motion capture has been carried out using passive reflective markers that bounce infrared or near-infrared light back to an array of up to 60 specially adapted cameras. These reflective markers are placed near the joints of an actor’s body, allowing the system to pinpoint the position of each body part relative to the others. Mathematical models are then used to map these positions onto a digital skeleton that replicates the actor’s movements on a computer. A computer-generated character, or avatar, can then be superimposed onto this skeleton so that the skeleton drives its movements.
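As a rough illustration of how marker positions become joint angles, the Python sketch below assumes that three markers (shoulder, elbow and wrist, with hypothetical coordinates) have already been located in 3D by the camera array, a triangulation step that is not shown. It measures the angle at the elbow between the two ‘bones’ that meet there. Commercial solvers fit a whole skeleton to all the markers at once, so this is a simplified sketch rather than how any particular system works.

```python
import math

Vec3 = tuple[float, float, float]

def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def norm(a: Vec3) -> float:
    return math.sqrt(dot(a, a))

def joint_angle(proximal: Vec3, joint: Vec3, distal: Vec3) -> float:
    """Angle in degrees at `joint` between the two bones that meet there.

    proximal/joint/distal are marker positions, e.g. shoulder, elbow, wrist.
    180 degrees means the limb is fully straight.
    """
    bone_a = sub(proximal, joint)
    bone_b = sub(distal, joint)
    cos_theta = dot(bone_a, bone_b) / (norm(bone_a) * norm(bone_b))
    # Clamp to guard against rounding pushing the value just outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))

# Hypothetical marker positions in metres (arm bent at a right angle).
shoulder = (0.0, 1.4, 0.0)
elbow = (0.0, 1.1, 0.0)
wrist = (0.3, 1.1, 0.0)
print(round(joint_angle(shoulder, elbow, wrist), 1))  # → 90.0
```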

In movies and computer games, actors can perform in specially built studios, wearing suits covered in these reflective markers. In the studios, they are shielded from rogue lighting that may interfere with the cameras or electrical interference that could throw off the tracking. There is time to deal with glitches in the software that can mean lengthy computer restarts, while errors in the avatar itself can also be fixed in the production process. There is no such luxury on the stage.

Illusions on stage

Adapting a technique usually used in feature films with long production times to a live stage performance was no easy task. The technology had to be robust enough for eight shows a week in a live environment and had to work with all of the other technologies used on stage. Initially, the team had imagined projecting the character of Ariel onto the stage while actor Mark Quartley, who plays the ‘airy sprite’ in the production, performed the role in a room backstage. This had been done before in Shrek on Broadway, where motion capture let an actor perform the Magic Mirror from backstage. Although a backstage room would have been a controlled environment, with a specialist team in charge of everything that happened in it, the production’s director, Greg Doran, decided that it was important for the actors to perform opposite each other.

While rehearsals went ahead, more than a year of research and development was undertaken to solve the problem. Sarah Ellis, Director of Digital Development at the RSC, asked Tawny Schlieski, Director of Desktop Research at Intel, to help after seeing a video of a computer-generated 1,000-foot whale that Schlieski and her team had created to virtually ‘fly’ over the audience at a consumer electronics show in 2014. Until then, the team’s large-scale digital work had mostly been one-offs. For The Tempest, they needed to turn the technology into something reliable enough that Quartley could turn up just before the show, put on a suit and have it work. The RSC and Intel turned to Serkis’s performance capture and production company, Imaginarium Studios, for help transferring the motion-capture technology to the stage.

Four different computer systems were custom-built to create the avatar, and the different looks of Ariel were created using the advanced graphics software Unreal Engine. The different versions of the character are mapped onto a three-dimensional model of actor Mark Quartley’s body, built using data picked up from the motion-capture sensors © Stephen Brimson Lewis (left image) © Intel (right image)

One option was to use active markers on the costume rather than passive reflective ones. These use tiny light-emitting diodes that pulse light at a high frequency rather than relying upon light bouncing off the markers. This means that the cameras can be placed up to 40 metres away rather than just 10 metres with the reflective markers, something that would be much more practical in a live stage performance. The only problem with this was that Quartley would have had glowing red dots on his body throughout the performance.

Fortunately, the fast pace of technology intervened with the release of new miniaturised wireless sensors that use a combination of gyroscopes, accelerometers and magnetometers to capture motion in three dimensions. These sensors, known as inertial measurement units, were built into the fabric of the figure-hugging bodysuit that Quartley wears during the play. The technology was originally developed to help navigate aircraft and guided missiles, but it has now shrunk to the point where it cannot be seen on his body. The gyroscopes measure how quickly each sensor is rotating, the accelerometers measure linear acceleration (including the steady pull of gravity, which reveals how a sensor is tilted), and the magnetometers act as a compass that keeps the sensors’ heading calibrated. In total, 17 sensors were attached to the suit: one on each hand, one on each forearm, one on each upper arm and so on. Each sensor was tethered to a wireless transmitter worn by Quartley that streamed the data to a router, allowing the engineers to calculate the rotation of each of his body segments relative to its neighbours and so obtain his joint angles. The process is not quite as accurate as an optical system, but this was not a problem because the actor did not need to be tracked around the stage.
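The article does not say how the suit’s software fuses its three sensor types. One of the simplest common approaches is a complementary filter, sketched below in Python for a single tilt axis: the integrated gyroscope rate tracks quick movements but slowly drifts, so at every step a small share of the drift-free (but noisy) tilt implied by gravity is blended back in. The magnetometer’s heading correction is left out, and the sample rate, the blending factor `alpha` and the readings are all assumed values. Once each segment’s tilt is known, a simple hinge joint such as the elbow can be approximated as the difference between the forearm’s and the upper arm’s tilt.

```python
import math

def accel_tilt(ax: float, az: float) -> float:
    """Tilt in degrees implied by the direction of gravity.

    ax and az are accelerometer readings in units of g; this is only
    trustworthy while the sensor is not accelerating much.
    """
    return math.degrees(math.atan2(ax, az))

def complementary_filter(samples, dt=0.01, alpha=0.98, start=0.0):
    """Estimate one body segment's tilt from (gyro_rate, ax, az) samples.

    gyro_rate is in degrees per second. Integrating it follows fast
    movement but drifts; blending in (1 - alpha) of the accelerometer
    tilt at each step pulls the estimate back towards gravity.
    """
    angle = start
    for gyro_rate, ax, az in samples:
        predicted = angle + gyro_rate * dt  # integrate the gyroscope
        angle = alpha * predicted + (1 - alpha) * accel_tilt(ax, az)
    return angle

def hinge_angle(parent_tilt: float, child_tilt: float) -> float:
    """Joint angle of a hinge such as the elbow: child tilt relative to parent."""
    return child_tilt - parent_tilt

# Hypothetical readings at 100 Hz (dt = 0.01 s): upper arm level and still,
# forearm held still at 30 degrees.
upper_arm = [(0.0, 0.0, 1.0)] * 500
forearm = [(0.0, math.sin(math.radians(30)), math.cos(math.radians(30)))] * 500
elbow = hinge_angle(complementary_filter(upper_arm), complementary_filter(forearm))
print(round(elbow, 1))  # → 30.0
```

A larger `alpha` trusts the gyroscope more and smooths out accelerometer noise, at the cost of correcting drift more slowly.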

Instead, the team focused on tracking the movement of his upper body and mapping this onto the digital characters beamed above the stage. Actions such as making the creature look as if it was flying or swimming could be achieved using pre-programmed computer graphics. Even so, the resulting digital avatar of Ariel has 336 joints that can be animated, almost as many as the human body has. Powering the avatar requires more than 200,000 lines of code running at the same time. All this takes some formidable computing power.
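One way to picture this split between live and pre-programmed movement is animation layering. In the hypothetical Python sketch below, each pose maps a joint name to a single rotation angle; joints in an assumed upper-body set take the performer’s captured values, while every other joint comes from a pre-authored frame such as a flying cycle. The joint names and the single-axis angles are simplifications for illustration, not details of Ariel’s rig.

```python
# Hypothetical joint names; a real rig (Ariel's has 336 joints) is far larger.
UPPER_BODY = {"spine", "neck", "head", "l_shoulder", "l_elbow", "r_shoulder", "r_elbow"}

def layer_poses(live: dict[str, float], canned: dict[str, float],
                upper_weight: float = 1.0) -> dict[str, float]:
    """Blend a live-captured pose with a pre-authored animation frame.

    Both poses map joint name -> rotation in degrees (one axis, for brevity).
    Upper-body joints that were captured take `upper_weight` of the live
    value; every other joint comes straight from the canned frame.
    """
    result = {}
    for joint, canned_angle in canned.items():
        if joint in UPPER_BODY and joint in live:
            w = upper_weight
            result[joint] = w * live[joint] + (1 - w) * canned_angle
        else:
            result[joint] = canned_angle
    return result

live_frame = {"r_elbow": 45.0, "l_knee": 80.0}                  # captured joints
flying_frame = {"r_elbow": 10.0, "l_knee": 5.0, "spine": 0.0}   # canned pose
print(layer_poses(live_frame, flying_frame))
# → {'r_elbow': 45.0, 'l_knee': 5.0, 'spine': 0.0}
```

Setting `upper_weight` below 1.0 would let an operator ease between the performer’s arms and the canned animation rather than switching abruptly.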

Unreal Engine

🕹️ The application of 3D video gaming graphics for The Tempest production

First created by video games developer Epic Games in 1998, the Unreal Engine technology has become one of the most popular tools for creating realistic three-dimensional graphics for games. It features a set of software tools written using the C++ programming language that allow users to animate digital characters, build virtual worlds and replicate realistic physical interactions between animated objects.

For The Tempest, designers were able to use the software’s powerful animation system to create and control a skeleton around which the body of Ariel could be built. The software allows scans to be imported, so scans of Quartley’s body were used.
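A skeleton of this kind is a hierarchy: each bone’s rotation is stored relative to its parent, so moving a shoulder carries the forearm and hand with it. The Python sketch below shows that idea (forward kinematics) for a flat, two-dimensional arm with hypothetical bone lengths and angles. Unreal Engine’s own skeletal animation works in three dimensions through its own API, which is not reproduced here.

```python
import math
from dataclasses import dataclass

@dataclass
class Bone:
    name: str
    length: float       # metres
    local_angle: float  # degrees, relative to the parent bone

def forward_kinematics(chain: list[Bone], origin=(0.0, 0.0)):
    """Return the world-space end point of each bone in a planar chain.

    Each bone's rotation is added to its parent's, so turning the shoulder
    also swings the forearm and hand attached below it.
    """
    x, y = origin
    heading = 0.0
    positions = {}
    for bone in chain:
        heading += bone.local_angle
        x += bone.length * math.cos(math.radians(heading))
        y += bone.length * math.sin(math.radians(heading))
        positions[bone.name] = (round(x, 3), round(y, 3))
    return positions

arm = [Bone("upper_arm", 0.3, 0.0), Bone("forearm", 0.25, 90.0), Bone("hand", 0.1, 0.0)]
print(forward_kinematics(arm))
# → {'upper_arm': (0.3, 0.0), 'forearm': (0.3, 0.25), 'hand': (0.3, 0.35)}
```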

Other systems within the games engine are then used to add ‘flesh and skin’ to the skeleton and to render it realistically by adding colour, texture, lighting, shading and geometry.
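A common technique for attaching ‘skin’ to a skeleton is linear blend skinning: each vertex of the character’s mesh is tied to one or more bones with weights, and its final position is the weighted average of where each bone alone would move it. The article does not say which method Ariel’s rig uses; the Python sketch below is a two-dimensional illustration with hypothetical bone transforms.

```python
import math

def transform(point, angle_deg, offset):
    """Rotate a 2D point about the origin, then translate it (one bone's transform)."""
    a = math.radians(angle_deg)
    x, y = point
    return (x * math.cos(a) - y * math.sin(a) + offset[0],
            x * math.sin(a) + y * math.cos(a) + offset[1])

def skin_vertex(rest_pos, influences):
    """Linear blend skinning for one vertex.

    influences: list of (weight, angle_deg, offset) per bone; weights sum to 1.
    The skinned vertex is the weighted average of where each bone alone
    would move it, which gives a smooth bend near joints.
    """
    x = y = 0.0
    for weight, angle, offset in influences:
        tx, ty = transform(rest_pos, angle, offset)
        x += weight * tx
        y += weight * ty
    return (round(x, 3), round(y, 3))

# A vertex shared equally by an unmoved bone and a bone rotated 90 degrees.
print(skin_vertex((1.0, 0.0), [(0.5, 0.0, (0.0, 0.0)), (0.5, 90.0, (0.0, 0.0))]))
# → (0.5, 0.5)
```

The averaged vertex sits between the two bones’ positions, which is what smooths the crease at a joint; it also shows the technique’s well-known tendency to pull skin slightly inwards at sharp bends.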

Unreal also features a physics engine, which performs calculations for lifelike physical interactions such as collisions, bouncing or movement of fluids. This can help ensure that characters do not simply pass through walls, for example, and was instrumental in ensuring Ariel moved in a realistic way. Lighting systems also allow scenes and characters to be lit in a realistic fashion so that, as they move, the light source remains consistent.
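At its core, a physics engine repeatedly advances objects a small step in time and then resolves any collisions. The Python sketch below shows that loop for a single object dropped onto a floor, using semi-implicit Euler integration and a restitution factor for the bounce. It is a deliberately minimal illustration with assumed values; Unreal Engine’s physics system handles rigid bodies, constraints and far more, through its own API.

```python
def simulate_bounce(height, steps, dt=0.01, gravity=-9.81, restitution=0.5):
    """Drop an object from `height` metres onto a floor at y = 0.

    Semi-implicit Euler: update velocity from gravity, then position from
    velocity. If the object would pass through the floor, it is placed back
    on the surface and its velocity is reversed and scaled by `restitution`
    (1.0 = perfectly bouncy, 0.0 = no bounce at all).
    """
    y, vy = height, 0.0
    for _ in range(steps):
        vy += gravity * dt
        y += vy * dt
        if y < 0.0:  # collision with the floor
            y = 0.0
            vy = -vy * restitution
    return y, vy

# With no bounce, the object ends up resting on the floor.
print(simulate_bounce(1.0, steps=1000, restitution=0.0))  # → (0.0, 0.0)
```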

Imaginarium’s designers used Unreal Engine to set a series of rules about how characters would be controlled. In a computer game, such rules determine how a player’s button presses make a character behave; on stage, they allowed Ariel to be puppeteered by the actor.

Character from a computer

Intel had to custom-build four different computer systems to cope. The first simply receives the data from the sensors in Quartley’s suit and passes it to a second machine, which maps the data onto a digital 3D model of Quartley’s body, built up using body scanning. This is then superimposed onto the digital avatar using the advanced graphics software Unreal Engine, developed by Epic Games for use in high-end video games. This allowed designers to play with the look of the character so that Ariel could appear in different guises throughout the play. A third computer system drives the theatrical control system, ensuring that the graphics produced by Unreal Engine appear on the right part of the stage at the right time. A final machine powers the video server that is connected to the 27 high-definition laser projectors that show the final images on the stage.

Intel built two identical copies of each system, running in parallel, so that if there was a problem, such as a glitch in Unreal Engine that would need a restart, the computer engineers could switch to the other machine, which would be in sync. This means that there were eight computer systems whirring away in the theatre to produce the digital character of Ariel. The computers driving the projectors, with 120 Intel Core i7 processor cores housed in server racks, were nicknamed the ‘Big Beast’ by staff.
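The article describes engineers switching manually to the spare machine. The Python sketch below automates the same hot-standby idea purely for illustration: both machines receive every frame, so the spare is always in sync, and output switches to it the moment the active machine fails. The class and method names are hypothetical, and a crash is simulated with a flag.

```python
class RenderNode:
    """Stand-in for one rendering machine; `healthy` simulates a crash."""

    def __init__(self, name: str):
        self.name = name
        self.healthy = True

    def render(self, pose: dict) -> str:
        if not self.healthy:
            raise RuntimeError(f"{self.name} is down")
        return f"{self.name}:frame{pose['frame']}"


class HotStandbyPair:
    """Send every frame to both machines and fail over to the spare."""

    def __init__(self, primary: RenderNode, backup: RenderNode):
        self.active, self.standby = primary, backup

    def render(self, pose: dict) -> str:
        # The spare processes every frame too, so it is always in sync.
        try:
            backup_output = self.standby.render(pose)
        except RuntimeError:
            backup_output = None
        try:
            return self.active.render(pose)
        except RuntimeError:
            if backup_output is None:
                raise  # both machines are down
            # Swap roles: the spare becomes the active machine.
            self.active, self.standby = self.standby, self.active
            return backup_output


pair = HotStandbyPair(RenderNode("A"), RenderNode("B"))
print(pair.render({"frame": 1}))  # → A:frame1
pair.active.healthy = False       # simulate a crash on machine A
print(pair.render({"frame": 2}))  # → B:frame2
```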

To capture actor Mark Quartley’s facial movements in real time, Imaginarium built a system to attach a motion-capture camera to his head. Special make-up is used to highlight key parts of his face and the movements are then fed into the computer system and reproduced on his digital avatar © Intel

One of the first challenges that the Intel team faced was making sure that the hardware did not melt, which meant keeping the computers away from the sweltering glare of the lights and using a lot of fans to keep them cool. In a theatre performance, which relies on keeping the audience’s attention on the stage, the buzz of these fans and the hum of the computers could have been a distraction. Each of the computer servers had to be packed with soundproofing and moved as far away from the stage as possible. The team also faced another noise problem: the 27 projectors above the heads of the audience would have created a cacophony that would be hard to ignore. However, just months before the production opened, the team got hold of new laser projectors that could turn on and off silently.

Imaginarium also built its own system for capturing the facial movements of Quartley in real time for a scene during Act III of the play, when Ariel is sent as a harpy to terrify the shipwrecked lords. The team created a contraption similar to a cycle helmet for Quartley to wear on his head during the scene. A metal bar extends from either side of his head to hold a motion-capture camera 20 centimetres away from his face. The team developed makeup for Quartley that would highlight his lips, eyes and other key parts of his face to ensure that the camera could pick up his movements and feed them to the computer system. They also developed algorithms that learned to recognise human faces before training the software on Quartley’s own face over several months as he rehearsed the scene. This allowed the designers to work out what the facial expressions Quartley produced would look like on the harpy.

In a neat reversal, Imaginarium expects to use the real-time facial technology developed for The Tempest in future feature film productions to allow directors to see their actors’ performances rendered onto digital characters on set rather than months later.

The production’s set features an elaborate gauze cylinder in the middle of the stage that uses the motion-capture technology to ensure that projections, lighting and spotlights are synchronised © Royal Shakespeare Company/Topher McGrillis

The performance of Ariel is not the only motion capture used during the play. The digital avatar is projected onto 14 curved gauze screens that fly in and out of the stage, while in the centre stands a smoke-filled gauze cylinder known as the ‘cloud’. Each screen is tracked using optical motion-capture cameras and software. Bespoke software was created by Vicon – a motion-capture technology specialist – and D3 – a video server firm – to track the screens as they move around. This data is superimposed onto a virtual map of the stage to ensure that the images are projected onto the right spot and that the lighting software and spotlights work in sync with the projections. The system is also used to create other visual effects seen during the performance, including projecting images of dogs onto drums carried by spirits as they chase some of the characters.
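The bespoke Vicon and D3 software is not public, but the core geometric step, turning a point on the virtual map of the stage into coordinates on a moving screen, can be sketched. The hypothetical Python function below works in a flat, top-down view: it takes a screen’s tracked position and rotation, undoes the translation and then the rotation, and returns where a stage point falls in the screen’s own frame. A real system works in three dimensions and also has to model each projector’s lens.

```python
import math

def stage_to_screen(point, screen_pos, screen_angle_deg):
    """Express a stage-space point (x, y in metres, top-down view) in the
    local coordinates of a tracked screen.

    screen_pos and screen_angle_deg are the screen's tracked position and
    heading. Undo the translation first, then the rotation.
    """
    dx = point[0] - screen_pos[0]
    dy = point[1] - screen_pos[1]
    a = math.radians(-screen_angle_deg)
    local_x = dx * math.cos(a) - dy * math.sin(a)
    local_y = dx * math.sin(a) + dy * math.cos(a)
    return (round(local_x, 3), round(local_y, 3))

# A screen tracked at (2, 1) and turned 90 degrees: where does the stage
# point (2, 3) fall on it?
print(stage_to_screen((2.0, 3.0), (2.0, 1.0), 90.0))  # → (2.0, 0.0)
```

Re-running this every frame with fresh tracking data is what would keep an image pinned to a screen as it flies in or out.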

To help the production team each night, engineers at the RSC also built controls into the theatre’s lighting control console so that they could change Ariel’s appearance using faders. What they created was a sort of ‘avatar mixing desk’, which could make Ariel disappear or reappear with an analogue fader, change his colour or appearance, or set him on fire and control how fiercely he burned. In the end, much of the appearance of Ariel’s digital avatar was automated with cues at Stratford, but the engineers hope to make more use of the mixing-desk approach when the show opens in London.
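A mixing desk of this kind boils down to mapping fader positions onto avatar parameters. The Python sketch below assumes that the console sends 8-bit levels (0 to 255, as DMX lighting channels do) and uses hypothetical parameter names for visibility, colour and fire. The article does not describe the actual control protocol or parameters.

```python
from dataclasses import dataclass

@dataclass
class AvatarLook:
    opacity: float = 1.0  # 0 = invisible, 1 = fully visible
    hue: float = 0.0      # colour shift in degrees around the colour wheel
    fire: float = 0.0     # 0 = no flames, 1 = fully ablaze

def fader_to_unit(level: int) -> float:
    """Convert an 8-bit fader level (0-255) to the range 0.0-1.0."""
    return max(0, min(255, level)) / 255

def apply_faders(levels: dict[str, int]) -> AvatarLook:
    """Map named console faders onto avatar parameters (hypothetical names)."""
    look = AvatarLook()
    if "visible" in levels:
        look.opacity = fader_to_unit(levels["visible"])
    if "colour" in levels:
        look.hue = fader_to_unit(levels["colour"]) * 360.0
    if "fire" in levels:
        look.fire = fader_to_unit(levels["fire"])
    return look

print(apply_faders({"visible": 255, "fire": 51}))
# → AvatarLook(opacity=1.0, hue=0.0, fire=0.2)
```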

Ironing out problems

The final performance is not without its problems. Critics have commented on noticeable lip-synching issues when Ariel sings or speaks, and there is also a delay as Quartley’s movements are crunched into bits and then turned back into images: the games engine, each video server and the projectors all add frames of latency between rendering and display.
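The delay adds up because each stage holds at least one whole frame before passing it on, so the total is the number of frames held across all stages multiplied by the time per frame. The Python sketch below shows the arithmetic; the frame counts and the 60 frames-per-second rate are hypothetical, since the article gives no per-stage figures.

```python
def pipeline_latency_ms(frames_per_stage: dict[str, int], fps: float = 60.0) -> float:
    """Total delay added by a chain of stages that each hold whole frames."""
    frame_time_ms = 1000.0 / fps
    return sum(frames_per_stage.values()) * frame_time_ms

# Hypothetical figures; the article does not give per-stage frame counts.
stages = {"game engine": 2, "video server": 2, "projector": 1}
print(round(pipeline_latency_ms(stages), 1))  # → 83.3
```

Even a handful of frames at this rate amounts to a delay of the order of a tenth of a second, which is enough for an audience to notice a mismatch with the actor’s voice.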

Because of the RSC’s tight production schedule, the cast did not begin rehearsing on the final stage until a few weeks before the play opened, and there were still some final problems to be ironed out. The very large metal structures within the theatre building created dead spots where the suit would not track properly because the sensors reacted to the metal, so Quartley had to remap his performance to avoid them.
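The article attributes the dead spots to the sensors reacting to the metal. In inertial suits, it is generally the magnetometers that large steel structures disturb, because the steel distorts the Earth’s magnetic field that the sensors use as a compass. One simple heuristic, sketched below in Python, flags a reading whose overall field strength strays too far from the expected local value. The expected strength and the tolerance are assumed numbers, and the article does not say how the production’s software handled disturbances.

```python
import math

# Assumed values: the Earth's field is roughly 25-65 microtesla depending on location.
EARTH_FIELD_UT = 49.0  # expected local field strength in microtesla
TOLERANCE_UT = 5.0     # deviation allowed before a reading is distrusted

def field_is_disturbed(mx: float, my: float, mz: float) -> bool:
    """Flag magnetometer readings (microtesla) whose overall strength differs
    noticeably from the expected local field, a common sign that nearby
    steel is distorting it."""
    magnitude = math.sqrt(mx * mx + my * my + mz * mz)
    return abs(magnitude - EARTH_FIELD_UT) > TOLERANCE_UT

print(field_is_disturbed(20.0, 0.0, -44.7))   # → False (about 49 microtesla)
print(field_is_disturbed(60.0, 10.0, -44.7))  # → True (about 75 microtesla)
```

While a reading is flagged, fusion software can stop trusting the magnetometer and rely on the gyroscopes alone, accepting some heading drift until the performer leaves the disturbed area.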

A technical team also waits on standby in the wings during each performance, ready to recalibrate Quartley’s suit when he comes off stage between scenes. Quartley also faced other problems in the final days of rehearsals. The graphics team were tweaking and updating the way the digital character moved almost continually, which meant that he had to change his onstage movements as well to get the avatar to do what he wanted.

The real achievement of The Tempest, however, may be to transform the way that stories can be told. Ben Lumsden, Head of Studio at Imaginarium, believes that this sort of live motion capture could be adapted to all sorts of events, such as live music performances and theme parks, where performers could wear sensors under their clothes. It could also be adapted for the business world; Microsoft and NASA have already experimented with using live avatars at meetings in place of people who cannot be physically present. The medical industry is also looking at whether the technology could be used in remote consultations between doctors and their patients, where it could allow doctors or surgeons to examine a patient in far more detail than a simple video link permits.

For Shakespeare, The Tempest was a Jacobean technological feast that used state-of-the-art effects to bring his words alive. Now, 400 years later, modern technology has brought even more magic to the stage.

***

This article has been adapted from "The technology behind The Tempest", which originally appeared in the print edition of Ingenia 71 (June 2017)

Contributors

Richard Gray

Author

Sarah Ellis is Director of Digital Development at the RSC. As a theatre and spoken word producer, she has worked with venues including the Old Vic Tunnels, Battersea Arts Centre, Southbank Centre, Soho Theatre and Shunt.

Ben Lumsden is an Entertainment Technology Consultant. At the time of publication, he was Head of Studio at Imaginarium Studios. He started working on motion capture on the film District 9, and his film credits since have included Avengers: Age of Ultron, Dawn of the Planet of the Apes and Star Wars: The Force Awakens.

Tawny Schlieski is the Founder of Shovels and Whiskey and the Owner of Four Forty Six. At the time of publication, she was the Director of Desktop Research at Intel Corporation, where she was a research scientist and media expert in the Intel Experience Group, and her work centred on new storytelling capabilities enabled by emergent technologies.
